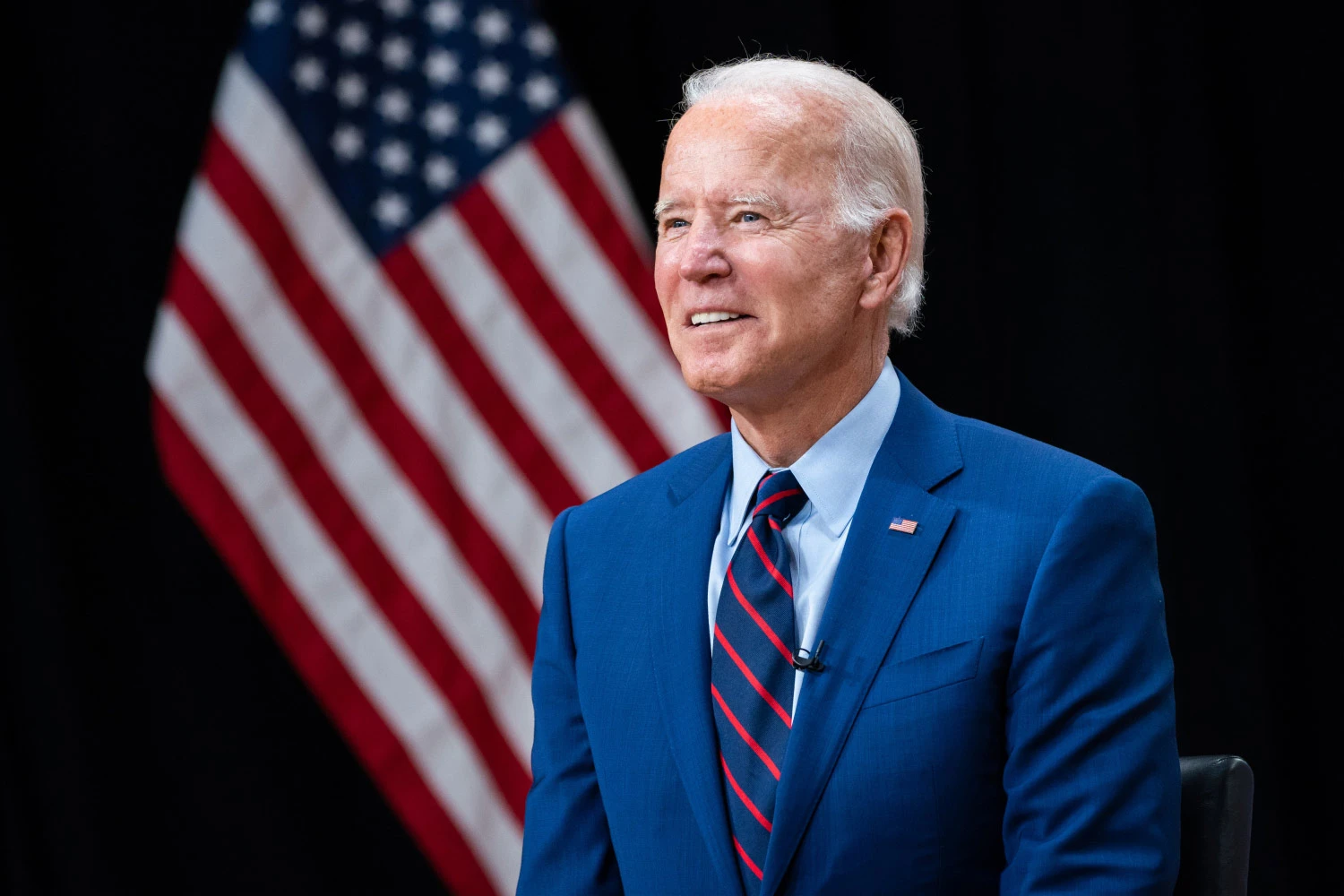

On October 30, President Joe Biden issued a comprehensive executive order aimed at safeguarding citizens, government entities, and companies by establishing stringent artificial intelligence (AI) safety standards.

The order introduces six new standards for AI safety and security and promotes the ethical use of AI within government agencies, in line with the principles of “safety, security, trust, openness.”

Among other things, the executive order requires companies developing “foundation models posing significant risks to national security, economic security, or public health” to share their safety test results with government officials.

It also calls for accelerating the development of privacy-preserving techniques in AI. However, the lack of specific implementation details has raised concerns in the industry about how the order could affect the development of top-tier models.

Adam Struck, a founding partner at Struck Capital and an AI investor, said the order shows that the administration takes AI’s transformative potential across industries seriously.

Yet he noted that developers face difficulty predicting future risks based on assumptions about products that are not yet fully developed, particularly in the open-source community, for which the order provides no clear directives.

Struck also noted that the administration intends to manage these guidelines through AI chiefs and governance boards within regulatory agencies. In his view, this implies that companies operating under those agencies’ oversight will need to follow regulatory frameworks the government finds acceptable, with an emphasis on data compliance, privacy, and unbiased algorithms.

Through its “ai.gov” website, the government has already disclosed more than 700 cases in which it uses AI internally.

Martin Casado, a general partner at venture capital firm Andreessen Horowitz, expressed concerns about the executive order’s potential impact on open-source AI.

He, along with other AI researchers, academics, and founders, sent a letter to the Biden administration, asserting that open source is crucial to ensuring software remains safe and free from monopolies.

The letter criticized the order’s broad definitions of certain types of AI models and warned that smaller companies could struggle to meet requirements designed for larger firms.

Jeff Amico of Gensyn echoed these sentiments, calling the order detrimental to innovation in the U.S.

Matthew Putman, CEO and co-founder of Nanotronics, a global leader in AI-enabled manufacturing, stressed the need for regulatory frameworks that prioritize consumer safety and ethical AI development.

He urged regulators to guard against overregulation, drawing parallels with the cryptocurrency industry.

Putman also argued that fears about AI’s catastrophic potential are exaggerated, since in his view the technology is more likely to have positive impacts than destructive ones.

He pointed out that innovative AI applications, especially in advanced manufacturing, biotech, and energy, are driving a sustainability revolution with improved processes that reduce waste and emissions.

Although the executive order is still new, the U.S. National Institute of Standards and Technology and the Department of Commerce have launched the Artificial Intelligence Safety Institute Consortium and are seeking members to contribute to AI safety efforts.

Discover the Crypto Intelligence Blockchain Council